Researchers at the University of Connecticut and their colleagues have found that what happens inside the brain when reading is the same no matter what the structure of a person’s written language, and that it is influenced by the same mechanism the brain uses to develop speech.

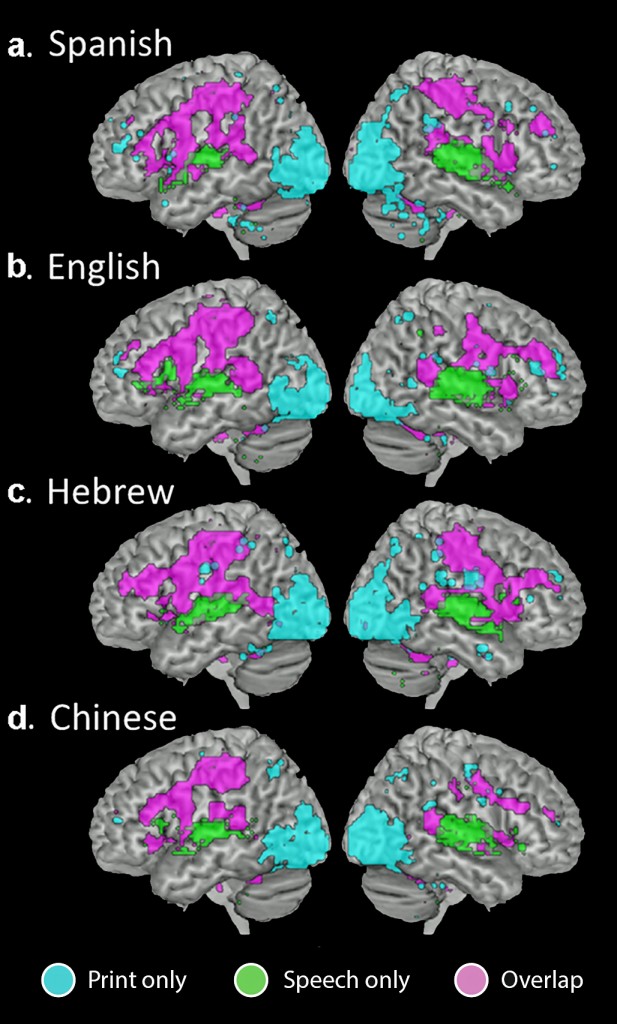

The study, based on evidence from functional MRI (fMRI) images of participants using four contrasting languages, was published in the Proceedings of the National Academy of Sciences in November.

Lead author Jay Rueckl, director of UConn’s Brain Imaging Research Center, says “reading is parasitic to speech” – meaning that the brain’s activity while reading draws on both speech and print mechanisms rather than being a simple response evoked by print alone.

By comparing fMRI scans of 84 participants in four countries with highly contrasting languages – Spanish, English, Hebrew, and Chinese – the authors found that brain regions in the left hemisphere, including Broca’s area (the region of the brain that influences the production of speech) and Wernicke’s area (the region that influences the understanding of speech), were active both for the comprehension of printed words and for the comprehension of speech.

This strikingly similar neural organization was present despite dramatic differences in writing systems, including whether the script is alphabetic (such as English, Hebrew, or Spanish) or logographic (such as Chinese), and regardless of the sound structure or the look of written characters.

Rueckl, an associate professor of psychological sciences, says the findings show that regardless of how spoken forms and their meanings are represented in a given writing system, proficient reading entails the convergence of speech and written systems onto a common network of brain structures.

This means that written languages have evolved to provide readers with maximal cues about spoken words and their meaning, and that the brain is capable of organizing both the spoken and written aspects of a language in a symbiotic system that facilitates a person’s ability to read.

The findings support a body of work indicating that a phonics-based approach to reading – emphasizing the relation between written words and the sounds of the spoken words they represent – is crucial for successful instruction and intervention.

Rueckl notes that reading is not something that people have naturally evolved to do. “To learn to read, you have to take a brain that is set up to do some specific kinds of things and do something else with it. The neural system that allows us to learn to read is highly influenced by what else the brain is doing at the same time.”

Rueckl and his colleagues at the Haskins Laboratories in New Haven are studying dyslexia – a common learning disability in which the brain has trouble matching letters with their sounds and recognizing the combinations that letters make on the written page. He explains that this fMRI study supports a long line of research showing that the condition is largely a phonological problem, because dyslexic children have problems coding the sounds of language.

“fMRI is pivotal for both basic and translational research,” he says. “For instance, the information we have gathered from this study will help us answer the question of, when you provide intervention and a child gets better at reading, does their brain system look like the typically developing system, or is it doing something else – compensating or developing a new strategy?

“This information will go a long way in helping us decide on the best course of action for helping these children become proficient readers.”